This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

This program is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

Copies of the source code for this software may be downloaded from http://JayReynoldsFreeman.com/My/Software.html. If you cannot find that site or have difficulty using it, contact me by EMail at Jay_Reynolds_Freeman@mac.com.

A copy of the GNU General Public License is available while running the program, via menu item "Warranty and Redistribution" of the Wraith Scheme menu. You may also find the license on line at http://www.gnu.org/licenses.

The Wraith Scheme executable program itself is shareware: You are welcome to use Wraith Scheme for free, forever, and to pass out free copies of it to anybody else. If you would like to make a shareware donation for it, that's fine, and there is information in the program about how to go about it, but in no sense do I request, insist, or expect that you do so. Furthermore, Wraith Scheme is intended to be complete and fully functional as downloaded. There is nothing to buy, there are no activation codes required, and there are no annoying reminders about shareware donations.

Introduction

The Source Distribution

What's New

In Brief

Brief Technical History and Description of Wraith Scheme

Brief Introduction to Wraith Scheme Parallel Processing

On Reading the Code

Motivation and Beginnings

Language, Compilers and Code, Oh My

MPW C

GNU and Unix

Xcode, Cocoa, and more Unix

Scheme by its Bootstraps

Design Influences and Architecture

An SECD Machine as an Architectural Level

Tagged Avals

User Interface Architecture

Scheme Machine Assembly Language

Scheme Machine Microcode

The Scheme Machine

Bringing Up Baby

Views of the Architecture

Main Memory, the Memory Manager, and the Garbage Collector

How Multiple Wraith Scheme Processes Interact with Memory

Types and Typing in Wraith Scheme

Object-Oriented Code in Wraith Scheme

Wraith Scheme Makefiles and Their Uses

A Guide to the Directory Structure

Source Code Versioning for Wraith Scheme

Localizing the Build and Test Environment

Building and Testing Wraith Scheme

Concepts to Know About

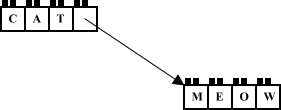

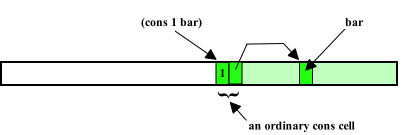

Cdr-Coding

A Note About Forwarding Pointers

Symbols and the ObList

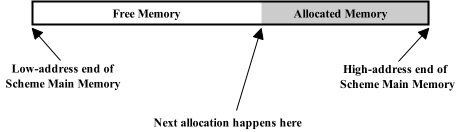

Layout of Scheme Main Memory

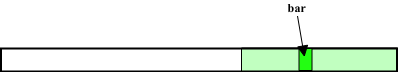

Memory Allocation and Cdr-Coding

World Files

Memory-Mapping and MMAP

Some Background for mmap

Permanent Bindings

Wraith Scheme In Unix Shells

The TTY Implementation

The NCurses Implementation

Files Used in the Cocoa Implementation

The Memory Manager

Allocation Caches

The Clowder

Clowder Responsibilities

Clowder Shared Memory Content

Locking Tagged Avals

A Cautionary Tale

Pointers from Scheme Main Memory

The World Handler

World Handler Issues

Pointers Within Scheme Main Memory

Mismatched Worlds

Big-Endian and Little-Endian

The Garbage Collectors

The Full Garbage Collector

Background

Single-Process Garbage Collection -- A Broad Look

Identifying Non-Garbage

Copying Across

Moving from Low to High

Updating Pointers

Expiring Forgettable Memory

Garbage Collecting of Ports

Bagging Garbage, Other Cleanup, and Compaction

Checking the Memory Spaces for Consistency

Multi-Process Garbage Collecting -- Issues

Multi-Process Garbage Collecting -- Coordinating

Multi-Process Garbage Collecting -- Sharing the Work

Multi-Process Garbage Collecting -- Parallel Worlds

Multi-Process Garbage Collecting -- A Sop to NUMA

Garbage Collection Summary

The Generational Garbage Collector

Generational Garbage Collection

Generations

Memory Space Use for Generational Garbage Collection

Extra Roots for Generational Garbage Collection

Identifying New Non-Garbage

Copying Down

Pointer Updates for Generational GC

Wraith Scheme Events

Background and Apologia

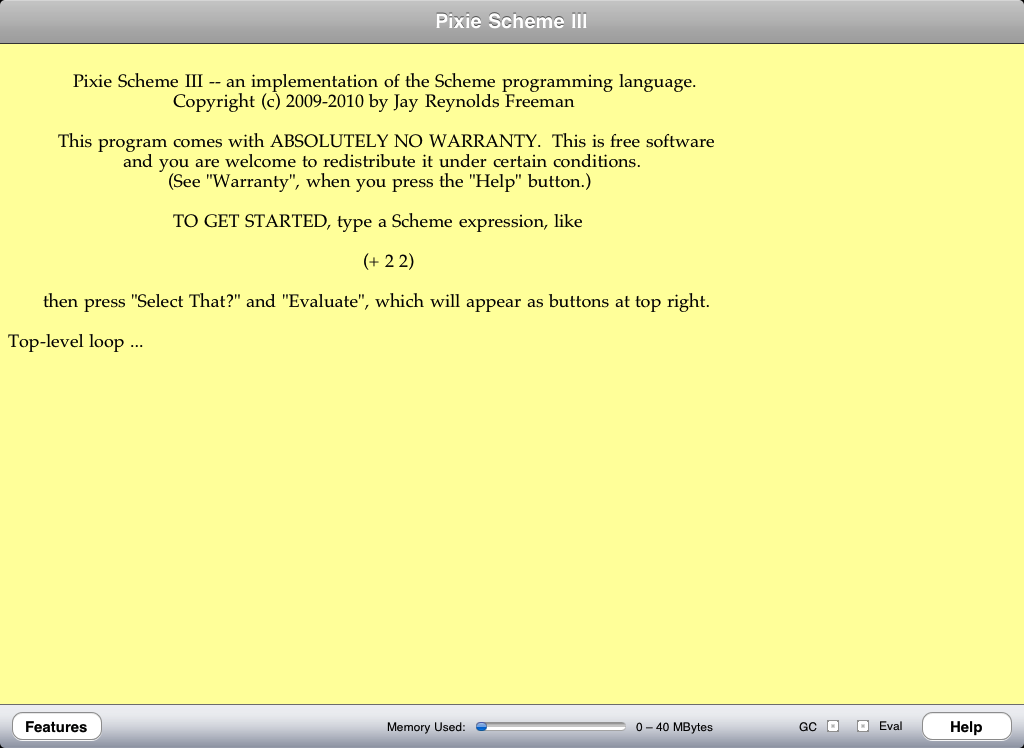

Pixie Scheme and Cocoa Events

Events in the Terminal Implementations

The Scheme Machine Event Loop in the Cocoa Implementation

The Cocoa Event Loop in the Cocoa Implementation

Coordinating the Cocoa Implementation -- Events

Coordinating the Cocoa Implementation -- I/O

The Wraith Scheme Interrupt System

SIGINT Interrupts

SIGALRM Interrupts

SIGUSR1 Interrupts

Summary of SIGUSR1 Interrupt Operation

Precautions for Using Interrupts

Environments and Environment Lists

Particular Data Structures

Local Types

Tagged Avals (Again)

File Descriptors

Data Descriptors

Memory Space Descriptors

Memory-Mapped Block Descriptors

Function and Special Form Descriptors

Type and Arity Checking

The Evaluator

The Evaluation Status Register

Evaluation Stack Frames

Tail Recursion

Adding a New Wraith Scheme Primitive

Initializing Wraith Scheme

Initializing -- Single Wraith Scheme Process

Initializing -- Multiple Wraith Scheme Processes

Initializing -- More about Synchronization

Initializing -- More about the Cocoa Implementation

Building the Default World

The Compiler

The Macro System

The Traditional Macro System

The Hygienic Macro System

Package Management

User Interface Design

Teletype Interfaces

A Teletype With Two Rolls of Paper

Menus, Buttons, and Drawers

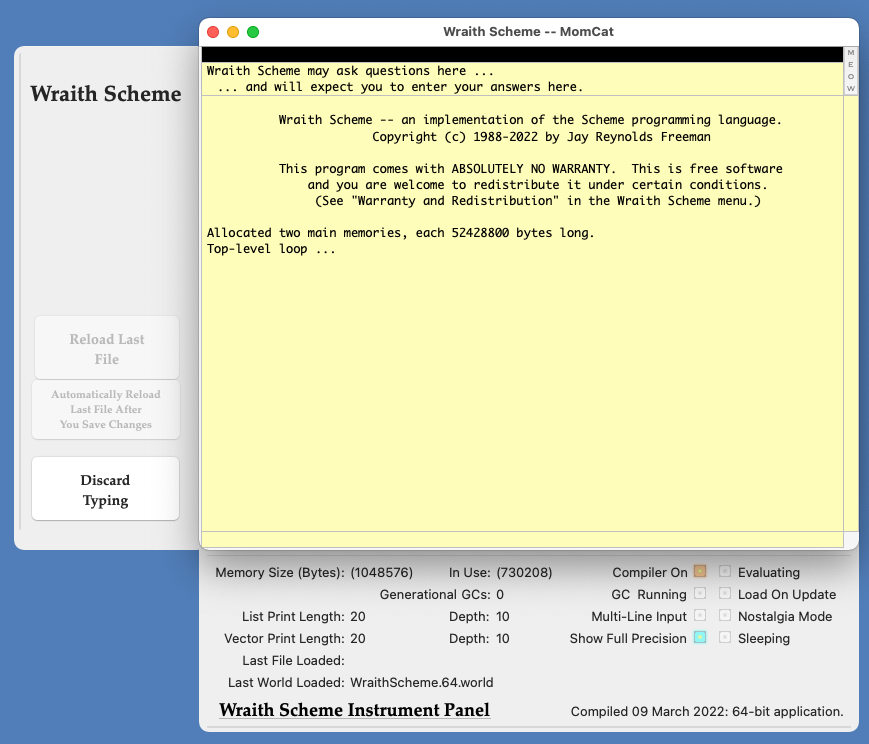

An Instrument Panel

Browser Interfaces

A Magic Slate

Talking to the Magic Mirror

Tracking Typed Text

A Few Bells and Whistles

The Return of Pixie Scheme

Pixie Scheme Design Considerations

Pixie Scheme Scheme Machines

Pixie Scheme II

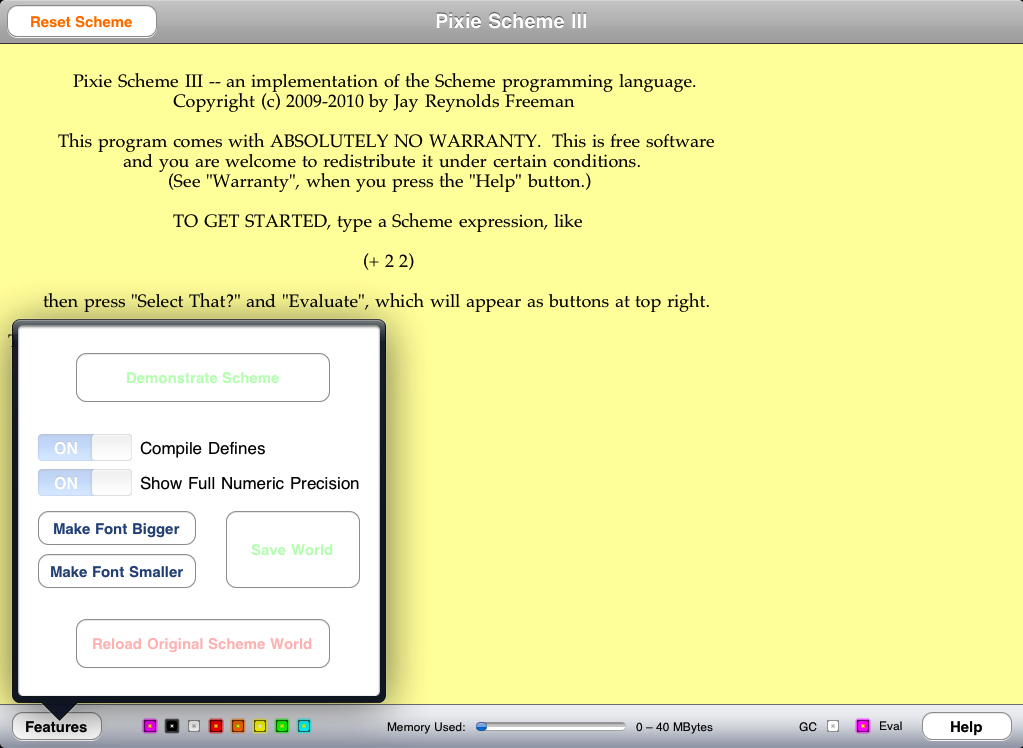

Pixie Scheme III

Things I Might Do in the Future

Things I Probably Won't Do in the Future

Acknowledgments

Bibliography

Welcome to the "Internals" file for Wraith Scheme, an implementation of the Scheme programming language for the Apple Macintosh™. Wraith Scheme was written by me, Jay Reynolds Freeman. By all means Email me about it if you wish.

I decided to write about the internals of Wraith Scheme for many reasons:

This material is more technical than the other Wraith Scheme documentation that I have written. Readers who do not have a certain amount of knowledge about programming in general and Lisp-class languages in particular, may find the going difficult.

I have tried to create the kind of document that I myself have always wanted, but never had, when I have found myself needing to come up to speed on a large and complicated piece of somebody else's code. This document is not a line-by-line guide to the code. It is partly a description of the architecture of the Wraith Scheme program, and partly a background document that gives medium to high-level information about how and why the code was written, and how it is supposed to work. Only you can judge how good a job I have done. By all means let me hear from you if something is missing, incomprehensible, or seems incomplete. I am sure the document has faults, if only because I am too close to my own work to have any sense of what about it is going to be most confusing to a newcomer. Yet I hope it will be useful, even so.

I think that the order in which I write about things herein is more or less the order in which a newcomer to the Wraith Scheme implementation should study them. Yet I could be wrong, and this document is so long that very likely nobody else but me will ever read the whole thing anyway. Indeed, there is probably more here than any one person could possibly want to know about Wraith Scheme, perhaps even me. Notwithstanding, it may help to have as much information in one place as possible.

This document mentions specific files and data structures in the Wraith Scheme source code. Source code is not provided in the download that includes the executable Wraith Scheme application; it is available in a separate, much larger download, from the "Software" page of my web site. The home page of that web site is http://JayReynoldsFreeman.com.

Don't be intimidated by the size of the source distribution. It contains many pictures, many long source files for testing, many long "gold" files for comparison with test results, and a lot of documentation. The actual source code -- C++, Objective C, and some Scheme itself -- comprises about 80000 lines.

The distribution as provided should build and run in approximately version 13.2 of Apple's "Xcode" development environment. That version of Xcode runs on macOS 11.6 ("Big Sur").

Some of the build targets in the Makefile do not require Xcode -- see the section on Wraith Scheme In Unix Shells, later herein. The present software distribution supports versions of Wraith Scheme that do not require the full Macintosh interface.

I do not presently plan to host an open-source development project. Notwithstanding, if there is sufficient interest, and if I can find a suitable place to put a large repository, I would be happy to do so.

If you have found a bug in Wraith Scheme and have code for a solution, or if you have some enhancement you would like to share, by all means let me know, for possible integration into my own next release of Wraith Scheme. I will of course give credit where it is due.

Here is a list of recent substantial changes in the Wraith Scheme "Internals" document, most recent first:

I believe Wraith Scheme is a complete implementation of the "R5" dialect of Scheme (major Revision 5 of Scheme). (The complete guide to that version is the 1998 Revised5 Report on the Algorithmic Language Scheme, edited by Richard Kelsey, William Clinger and Jonathan Rees. That report is available on several Internet sites, such as http://www.schemers.org.) There is one caveat to the word "complete": The R5 report describes a number of optional Scheme features, using language like "some implementations support ...", or something similar. Wraith Scheme does not support all of those features.

For further details as to how Wraith Scheme conforms to and enhances the Scheme specification of the R5 report, see other material that I have provided, such as the Wraith Scheme Help file and the Wraith Scheme Dictionary, both of which come bundled as part of the released program. The document you are reading has to do with the internals of Wraith Scheme, at the level of reading and perhaps even understanding the source code.

The version of Wraith Scheme for which this "Internals" document is prepared is Wraith Scheme 2.27, which is a 64-bit application for the Apple Macintosh, that requires at least macOS 11.6 ("Big Sur") and either Intel microprocessors or Apple's arm-based proprietary processors ("Apple silicon"). Earlier versions of the 64-bit application do exist, starting with 2.00, and there is also a 32-bit version, whose first release was 1.00 and whose most recent is 1.36 as I write these words. Much of the material herein will also apply to those earlier versions. I have not released the 32-bit versions as freeware. (They are not particularly secret; I am just too lazy to support two big open-source distributions). Even so, the descriptions of how things work that I provide in this document may apply to them.

Wraith Scheme was named after my late, lamented, scruffy gray cat, "Wraith". If you don't think that makes sense, remember that it runs on a computer named after a raincoat.

A version of this section also appears in the Wraith Scheme Help file.

I changed to 64 bits and to Snow Leopard or better to allow Wraith Scheme to have a larger Scheme memory than one Gigabyte, and to allow me to use some of the special Macintosh software features that are not available in earlier versions of Mac OS.

In consequence, for a C program, Pixie Scheme was remarkably easy to modify and maintain. For example, it took only a few hours to add support for IEEE 80-bit floating-point numbers to Pixie Scheme, and most of that time was spent writing functions that actually implement operations on these entities, not in tying 80-bit floats into the control-flow structure of the program. Wraith Scheme retains the same ease of modification.

The view is an interface built using Apple's Interface Builder application and its descendents in Xcode, together with code for a number of supporting controllers for specific components of the view. That code is mostly written in Objective C.

The main controller -- the one that sits between the model and the view -- has two parts. The first part comprises a fair number of semi-autonomous methods whose actions are triggered by various events, such as typing "return" in the Wraith Scheme Input Panel or operating one of Wraith Scheme's buttons or menu items. The second part is a timing mechanism, that polls the evaluator thread regularly, to see if it has anything for the controller to do.

A version of this section also appears in the Wraith Scheme Help file.

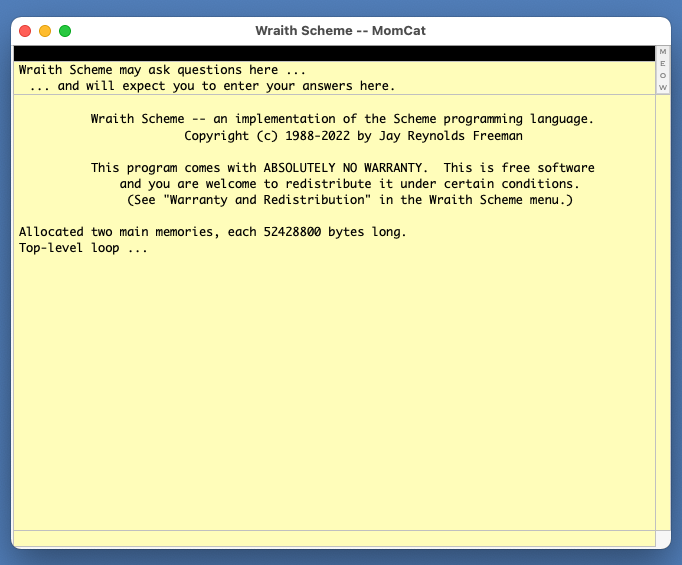

Since Wraith Scheme is named after a cat, I decided to continue the feline metaphor. The privileged Scheme process is called the "MomCat", and the others are called "kittens". The image of a mother cat trying to ride herd on a bunch of rambunctious offspring is perhaps appropriate. (A friend of mine once claimed to have a fifth-degree black belt in the little known Japanese martial art of ikeneko -- which means "cat arranging". Programmers will be familiar with the skill required. Managers of programmers will be extremely familiar with that skill.)

There is no way to add kittens to a group of parallel Wraith Scheme processes that is already running. You may remove a kitten from a group via, e.g., the "Quit" command from that kitten's Wraith Scheme menu, but there is no means to put back a kitten that is gone, or to add a new one if you need more, other than changing the Wraith Scheme preferences and restarting Wraith Scheme.

Each kitten has a number -- its kitten number -- that identifies it uniquely. The MomCat also has a kitten number, which is always zero, and the terms "MomCat" and "kitten 0" are synonyms. The other kittens are numbered consecutively from 1.

Plausible uses for parallel Wraith Scheme might include running completely separate programs, running distinct programs which all need to act on a common data structure, or using one Wraith Scheme process to inspect or monitor another. Separate Wraith Scheme processes may also be useful for monitoring or dealing with asynchronous input or interrupts.

Among the hidden virtues of this kind of parallelism are reduction in swapping due to sharing a common Scheme memory, and leveraging the Unix mechanisms to switch and schedule processes instead of having to write your own.

By "low-level measures", I mean, for example, that I have attempted to maintain the integrity of Scheme main memory by blocking conflicting simultaneous writes.

Thus, for example, if two or more kittens apply "set!" to the variable "x" at more or less the same time, the value of "x" after all the "set!" operations have returned will be a value that one of the kittens intended, but in the absence of additional locking or sequencing mechanisms imposed by the user, there is no guarantee which of the several values of "x" will obtain.

On the other hand, the storage location referenced by "x" should not end up in an invalid state, such as might occur if different portions of the data structure at that storage location had ended up written by different kittens, who were perhaps attempting to write different kinds of values at more or less the same time. Failure to guard against such a race condition might for example result in a tag that said "string" accompanying a data value that was in fact merely an integer, resulting in a crash when some subsequent operation attempted to read the "string" beyond the end of the integer. My means of blocking conflicting simultaneous writes is (knock on wood) intended to prevent such disasters.

Operations like "define", "set-car!", and "set-cdr!" -- indeed, everything that ends with a '!', and some others -- are similarly protected; that is, from the viewpoint of the Wraith Scheme evaluation process, they are what is called atomic operations.

Each application in the cluster accesses memory in two architecturally different ways. First, it accesses memory for its own private use -- notably but not exclusively for the text, heap and stack segments of its C++ and Objective-C operations. Second, it shares with the other applications in the cluster a large memory-mapped area which is a global resource -- Scheme main memory for all applications in the cluster. The protocol for accessing this resource involves lockless coding.

Furthermore, one application in the cluster -- the MomCat -- is special. The MomCat is responsible for coordinating the activities of the other applications in the cluster, when such coordination is required -- notably but not exclusively for garbage collection.

If you do decide to look at any of the source code for Wraith Scheme, you may need to know a few things about my style and preferences.

if( whatever ) {

stuff

more stuff

}

else {

other stuff

still more stuff

}

while( the world turns ) {

this

that

the other

}

I started programming with Fortran II (a most interesting language to use, as the machine we were using it on did not have a call stack) as an undergraduate at the California Institute of Technology in the mid 1960s, and continued to use only varieties of Fortran (generally with call stacks) up through the completion of my physics doctoral thesis project. I became more intrigued with programming languages as time went by, and by the early 1980s I was ready for some new ones. I learned C on a PC I had built at home, Steve Ciarcia's MicroMint MPX-16, running a 4.7 MHz Intel 8088 and CPM-86, using the DeSmet C compiler.

(What fun bringing up a computer from a bare board! I remember making a careful aesthetic judgment about what color bypass capacitors to use; I went for California-mellow earth-tone brown. Also, I am not sure the DeSmet folks ever forgave me for pointing out a typographical error in the source code for their compiler on the basis of a disassembly of generated code.)

A 16-bit C system was neat to learn with, but not quite as capable as I wished. Fortunately, my employers had a Digital Equipment Corporation 20/40 mainframe computer that mostly sat idle, and I had a VT-100 terminal connected to it sitting on my desk at work. At the time, the DEC-20 was considered a very serious machine, with a whopping 256K words of 36-bit memory. (No typo, I do mean "36 bits". Remember, if you don't have 36 bits, you aren't playing with a full DEC.) Although it did not have support for any language nearly as modern as C, there was some interesting older arcana to be had from the DEC User's Group, DECUS.

It had already occurred to me that a good way to widen my horizons as a programmer might be to try my hand at a language that was as different from Fortran as I could find. Now there was an obvious choice, because the DECUS tapes had MACLisp, a venerable and very fine classic Lisp implementation based in great part on work done at MIT during the previous decade. So I spent many hours before work in the morning, or after work at night (and it was not always entirely clear which was which), with a copy of Winston and Horn's "Lisp" in hand, learning the language and becoming impressed with its power and versatility, especially compared to Fortran II.

My next job involved helping to implement a data-analysis scheme based on category theory in Lisp. It would have been fascinating if I had understood it. The Lisp implementation was "RLisp", a chimera involving a rather standard Lisp with an Algol-60-like syntax layered on top of it. (Caution: "RLisp" now most often refers to a Lisp embedded in Ruby -- it didn't then.)

Then I got hired by FLAIR -- Fairchild Laboratory for Artificial Intelligence Research -- it would have been infelicitous to make an acronym out of Fairchild Artificial Intelligence Laboratory -- just about when Schlumberger bought Fairchild, spun off most of it, and renamed the AI lab to SPAR (Schlumberger Palo Alto Research). SPAR was a world-class facility, literally down the hill from Xerox PARC -- which led to discussion of whether SPAR meant Sub-PARc, and to frequent citation of the venerable Psalms 121:1, freely translated: "I will lift up mine eyes unto the hills, from whence cometh my inspiration." It had a veritable insectarium of wonderfully-named Symbolics 3600 Lisp machine workstations, like "Moaning Milky Medfly" or "Praying Green Mantis". (The basic namespace was insects, with one adjective to indicate whether the particular machine had a speaker or not, and another to indicate whether its display was monochrome or color, and yes, "Praying Green Mantis" was a deliberate pun.)

I was way over my head in terms of theoretical knowledge and Lisp coding skills, but I didn't tell anyone, and besides, what fun is swimming when you can touch the bottom with your feet.

Lisp continued to fascinate me, but the standard varieties of the language were evolving into monstrous constructs. Zetalisp, the Symbolics dialect when I first encountered the machines, was huge, and its closely-related replacement, Common Lisp, was bigger still. Furthermore, the language had a few warts that purists might object to, like not treating functions quite the same as other data, and like handling recursion with a big call stack when it wasn't strictly necessary.

I was becoming interested in enhancements to Lisp, to deal with things of personal interest, such as parallel programming. (Most of my career in computer science has been spent dealing with parallel hardware in one way or another.) That would require a Lisp implementation that I could modify, and the thought of doing so with something as gargantuan as Common Lisp was daunting.

About then, someone -- it may have been Judy Anderson -- suggested Scheme as a small, elegant Lisp. There was an implementation on the 3600s -- implementing one Lisp in terms of another is a time-honored tradition. I played with it and found it righteous. I bought a commercial implementation for my shiny new Mac II, but became suspicious of its quality when I asked if 1.0 was an integer and it said "no" (that is, (integer? 1.0) => #f) . I already had plenty of reasons to write my own implementation, but that was the straw that broke the camel's back, and I was off.

Wraith Scheme's source code comprises a whole lot of C++, modest amounts of Objective-C and of Scheme itself, and one file containing assembler embedded in C++ functions, whose purpose is to permit use of "locked" test-and-set and test-and-clear assembly language instructions for locking objects in Scheme main memory while they are being accessed. A historical perspective might help understand how all this stuff fits together, and why I did it the way I did.

When I started writing code for a Scheme interpreter, in 1987, it was a different era. C++ seemed promising, but there were no decent compilers for it yet. A Megabyte was a lot of memory, ten MHz was a really fast processor, "Gnu" was the common name of an esoteric species of silly-looking African ungulates, and "open-source" was a failure mode in MOSFET transistors.

Apple did support an object-oriented programming language -- Object Pascal -- but I had little experience with Pascal and did not particularly like it. Apple had a reasonable C compiler, bundled with its Macintosh Programmer's Workshop development environment, but it was not even ANSI-standard C yet -- function prototypes were optional, and so on through a long list of abominations. Mac programs were single-threaded, you were supposed to roll your own event loop, and oh, by the way, yes, it was indeed great fun hunting brontosaurus with all the clan.

But I knew C and had been programming in it for years, function prototypes or not.

So, after demonstrating enough stubbornness to terrify a cat and spending enough spare time to prove to anyone who might have cared that I desperately needed a life, I had in hand a reasonably functional R3 Scheme -- Pixie Scheme -- and was even bold enough to release it as shareware. It made almost twenty-five dollars altogether -- that is gross receipts, not net -- but the lift to my ego was priceless, and it was a lot of fun getting it going.

Then I began to suspect that a 16-MHz machine with only 5 MByte of usable address space was probably not sufficient hardware to tackle the artificial intelligence problems that interested me; at least, not with any program that I was capable of writing. (That was my 1987 model Mac II, with a RAM upgrade, and it was arguably the most powerful personal computer on the market at the time I bought it.) So I shelved Pixie Scheme and did other things for a decade or two.

Fast-forward to 2006, when I finally got around to replacing my tired Mac II with a shiny new Macbook 13. (One of the virtues of working in the computer industry is that you can get big companies to give you all the toys you want to play with, so you don't need to keep buying them for home.) It dawned on me that the most important change in personal computers in two decades had been to replace "Mega" with "Giga" in all the places that mattered. My new Macbook had 1000 times as much memory, 1000 times as much disk space, and by any reasonable criterion 1000 times as much processor power as its predecessor. Perhaps Pixie Scheme might run a little faster on such a machine.

How do you port thirty thousand lines of twenty-year-old code to new languages, new operating systems, new libraries, and new programming paradigms? I didn't know. I still don't. Fortunately, I didn't bother thinking about it -- if I had, I like to think I would have had enough sense to run screaming. I just pressed on regardless.

First, I had to recover the source. It was in a BinHex archive that I had squirreled away on a Unix machine, but somewhere along the line I had foolishly passed the archive through software that substituted something like user name and date for one of those useless two-character strings that nobody would ever care about except when it turns up in places like BinHex archives. I had to hand-edit the archive file before I could expand it successfully and retrieve the source.

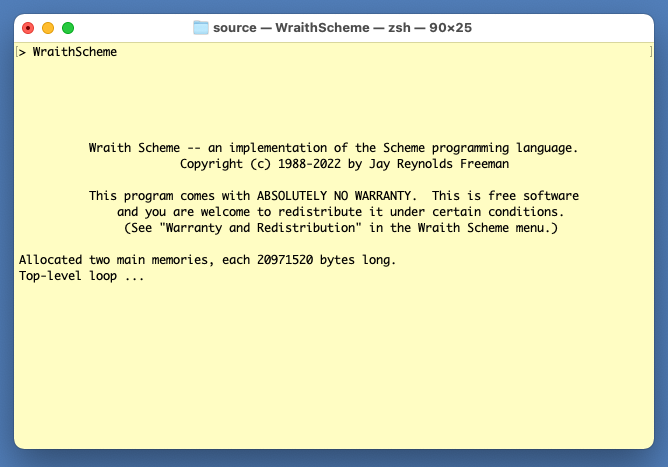

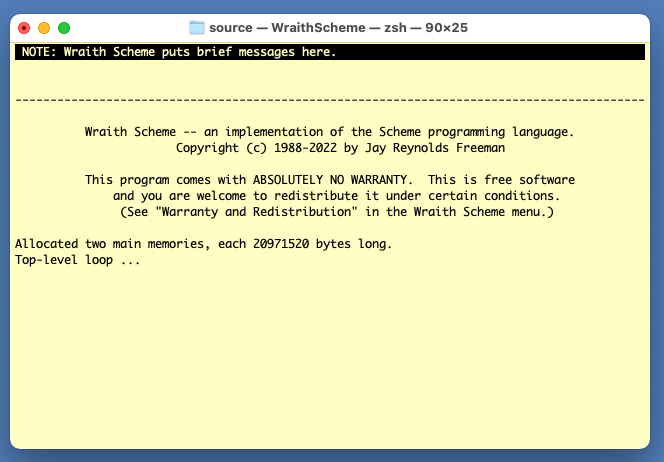

I decided to port to the modern Mac by way of an intermediary with a classic Unix stdin/stdout interface. Thus I could get the heart of the code -- the Scheme machine -- running again, while using a well-established and uncomplicated user interface.

Ripping out the Toolbox calls was no problem. I had always anticipated that some day, Pixie Scheme might run in a different environment. So I had reasonably encapsulated the Toolbox stuff in a separate file with a tolerably well-defined interface to the rest of the code, kind of like the model-view-controller paradigm, but a bit before its time. Sometimes I do something right: It turned out not to be a big deal to install a simple stdin/stdout interface in its place.

My hand-rolled event loop remained not only viable but also very straightforward. It had only a few events that required reporting to the user, a few places to stop and wait for the next character, and a simple signal-handler for control-C, for when the user wanted to get the program's attention while it was doing something else.

In this form, my resurrected program came up and ran fairly quickly. The GNU C compiler compiled nearly all of the original code, with no changes.

I decided to press development forward on two fronts. I continued to use the Terminal version of the program to shake down and refine the Scheme machine, and started a separate line of development toward a thoroughly modern Mac graphical user interface (GUI) to wrap around it.

On the "Scheme machine" effort, I bit a big bullet: I changed all the .c file names to .c++ and ran them through the GNU C++ compiler -- I think it was 3.0 -- with "all" warnings enabled (-Wall), and fussed with the code till I got an error-free compile. That shook out a lot of fluff, but I didn't find any real crashers. I had of course been using lint-like utilities since square one of development, and had tried scrupulously not to cut corners in coding style. Been there, done that, got the tee shirt: It's bloody.

Sometime about there I decided to rename the project. Thus Wraith Scheme was born.

I found more errors later when I switched from a 32-bit application to a 64-bit one. I had created typedefs to call out "full-sized" words from the start, but there was nothing like changing "full-sized" from 32 to 64 bits to find places where I had not used them consistently.

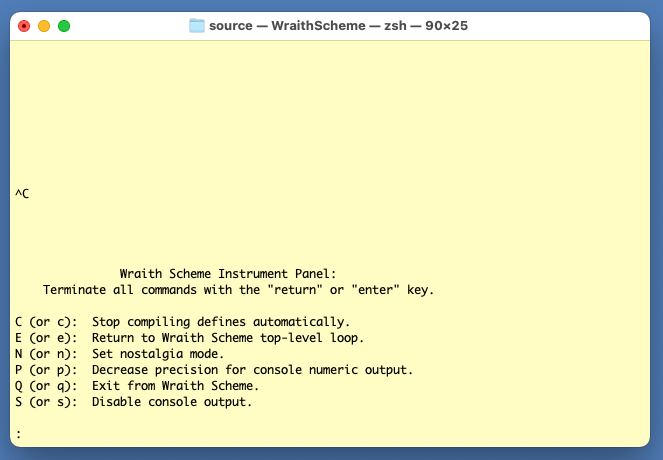

On the interface side of things, I had used NeXT hardware and software in the mid 1990s. Thus I knew that Objective C was a straightforward insertion of a Lisp function-calling mechanism into plain vanilla C, and I was familiar with early versions of some of the Xcode tools, and with the enormous libraries of NS-what-have-you stuff that supported code development. I even remembered that "NS" stood for "Next Step". Thus I was off to a good start getting a Macintosh GUI written with these tools, but it took a while to shake off the rust. Furthermore, it rapidly became clear that the simplest way to adapt my Scheme machine so that it least gave the appearance of doing things <fanfare>The Macintosh Way</fanfare> would be to run both it and its hand-rolled event loop, in a separate thread. Synchronization and communication between that thread and the one running the UI was going to be tricky. I decided to procrastinate, and write another version of my Scheme, with a user interface that was more complicated that stdin/stdout, but less complicated than a full-blown canonical Macintosh application.

I ended up doing an implementation whose interface was based on an older and more widely-available Unix ASCII-graphics system: I got a version of Wraith Scheme up and running in a Terminal shell using ncurses. That was interesting in its own right -- I had never used ncurses before, and Apple's support for it had a few bugs. Yet the project gave me additional, valuable experience at abstracting the requirements for a GUI and for its encapsulating controller and interface code, away from the Scheme machine.

I have continued to maintain and test all these versions of Wraith Scheme: My regular builds create and test the one with the Mac GUI interface, the one with ncurses, and the one that just uses stdin and stdout. The two terminal versions lack much of the GUI window dressing of the Mac build, and they do not implement parallel processing. Nevertheless, they are occasionally useful when I break the Mac GUI build, and would be good place to start if I ever port Wraith Scheme to another architecture -- or if you do.

If someone out there is wondering why I didn't try an X11 graphical user interface, the answer is simple. I didn't know anything about X11, and I still don't.

As Wraith Scheme developed, I took to writing some of its code in Scheme itself. That worked fine, but there was an interesting problem when it came to the implementation of hygienic macros. The code to create and expand them is written in Scheme, and makes frequent use of Scheme constructs which themselves are defined as macros. Thus there is a chicken-and-egg problem: How can you use macros to expand their own definitions into a form where you can use them?

The solution I chose was bootstrapping, and I have since learned that that solution is common. I wrote some Scheme routines that would expand the code that defined hygienic macros into the underlying low-level constructs that do not use macros, and write out the expanded macro code into files. Getting those files right for the first time was quite a job -- I had to hand-expand all the macros. When it was done, though, I had an easy way to build Scheme worlds and propagate changes. When I create a Wraith Scheme world, I load in the expanded macro files, so that the macros can be used. Then I use my Scheme routines that expand macros, to generate new versions of the expanded files. If the new ones agree with the old ones, all is well.

Not every function in every file that goes through the bootstrapping process has its macros expanded. Not all of the functions are required to get the macro-processor and compiler running. Since the first bootstrap was done by hand, with some difficulty, I arranged to bootstrap only a minimal set of functions.

I used the same trick, of maintaining old files with the macros expanded and generating new ones in each build, for all the other Scheme code that goes into a Wraith Scheme world. I took care to provide plenty of software support in my own development environment, even above and beyond normal version-control stuff, to be sure not to overwrite any set of expanded files prematurely. I also keep a few recent versions of Wraith Scheme, complete with worlds, on hand, so that I can run my macro-expansion software on the relevant Scheme source if I have somehow broken the current build of Wraith Scheme.

I have often wondered whether bootstrapping works for chickens as well. All I really know is that I once heard Steven Hawking asked which came first, the chicken or the egg, and he answered that it was definitely the egg. So I guess I will go with that.

Technical Note: It eventually occurred to me that Hawking was absolutely right: The amniotic egg, with its waterproof enclosure, evolved more than 300 million years ago, but the chicken is a modern species. I wonder if anyone ever asked Hawking why the chicken crossed the road.

Probably the best single thing that happened to my Lisp-implementation project was that I was deprived of a personal computer at the time I was beginning to work seriously on it. I was in the process of moving from Palo Alto to Santa Cruz, and had started to box things up, including my home computer. Then, for one reason or another, the move got delayed for the better part of a year. With no computer at hand, I could not make the classic mistake of just starting to code -- instead, I had to do serious design, and think about what I was doing at an abstract level, and that was an enormous benefit.

Designing a Lisp implementation involves architectural decisions of many kinds, at many levels. For example:

Some of those questions are answered when discussing the type of Lisp to be implemented -- such as whether functions get treated as a different kind of entity than other data. Some -- such as how to reclaim storage, or "collect garbage" -- are a bit below the language implementation, but are usually described in terms of abstract algorithms at a level considerably higher than machine language or hardware. Some, such as how the user interacts with the system, are at an architectural level even higher than the language itself. Some have to do with deeply-buried details of how the system works, details that might not ordinarily be the least bit visible to a user.

Fortunately, much of my professional work had dealt with architectures explicitly, and many of the sources I had been reading to learn about Lisp focused intensively on one or another specific architectural level. Thus I was nudged into thinking about my nascent design in terms of the different layers of the system architecture -- how would each one work, and how would they communicate with one another. That helped a lot.

At some point I should probably mention that both of my parents were architects -- the kind who design buildings. Maybe that helped with my mind-set. Or maybe the old joke about programmers, woodpeckers, and the destruction of civilization struck a little too close to home.

One reference that was particularly useful was Peter Henderson's Functional Programming Application and Implementation. Henderson's focus was on applicative programming, encompassing the particular meaning (of several that are common for the term) that the value associated with a variable name never changes once it is assigned.

Lisps in general and Scheme in particular are not applicative languages. Notwithstanding, Scheme could reasonably be said to be almost one, in the sense that Scheme -- well, R3 Scheme, at any rate -- differs from a pure applicative language only in having a few well-defined operations -- all the ones that end with a '!' -- that change the values associated with variables.

Furthermore, what Henderson did to illustrate a functional language, was to describe the implementation of a small Lisp-like functional language based on the machine language of a particular kind of virtual Lisp machine -- an imaginary CPU -- called an SECD machine. Before I go into the details of what all that is about, let me state clearly the major point that sank home from this illustration:

Thus, getting a bit ahead of myself, the broad structure of my clever plan was to:

An SECD machine is a relatively simple construct that sounds a lot like the kind of toy-like CPUs that many of us studied during our introduction to computer hardware. S, E, C, and D are registers in the virtual hardware, each pointing to a push-down stack or list in the virtual machine's memory. The letters stand respectively for Stack, Environment, Continuation, and Dump.

The Stack is not a function-call stack, it is the kind of stack for arithmetic calculations that you encounter in Forth or in an RPN calculator. Thus, if you want to add A and B, the code for the virtual machine looks like this:

PUSH A PUSH B ADD

whereupon the sum of A and B is left on top of the stack.

The Environment register points to some kind of data structure for use in looking up the values bound to variables. In a lexically scoped language, such as Scheme, it might point to a big list that was the concatenation of all the environments from innermost out to top level, or perhaps it would point to a list of those environments, depending on the implementation.

The Continuation is perhaps best thought of as "where the program counter points". It represents an instruction stream, typically what is required to finish the function currently being processed.

The Dump corresponds more or less to a function-call stack: When you call a function, you wrap the current Stack, Environment, and Continuation up in a package of some sort -- typically a list -- and push it onto the Dump. When the called function returns, you pop the Dump and restore those registers from what you get. Note in particular that every function application can have its own Environment in which to evaluate variables, and that on the occasion of a tail-recursive function call, you do not strictly need to push anything onto the dump at all. Note further that having an empty continuation -- nothing more left to do in the current function -- at the time you call another function, is a sure-fire run-time indicator of a tail-recursive call.

Again getting ahead of myself, it is worth noting that in a Lisp implementation with garbage collection, S, E, C, and D are all "roots" for the traverse to find things that are not garbage. Any substantial implementation will likely have other roots as well.

I indeed first implemented Pixie Scheme -- Wraith Scheme's predecessor -- as an SECD machine, according to the plan a few paragraphs up, with all four registers being pointers to push-down lists in Scheme main memory. That soon changed, however, in the interest of optimization for speed. Rather early in Pixie Scheme's development, I combined S and D into a true stack -- a large block of contiguous memory addresses outside Scheme main memory. I made a special register, R, as an accumulator, to hold the values that might normally go on top of the stack. Furthermore, while still developing Wraith Scheme as a 32-bit application, for similar reasons, I reimplemented the C register as a stack of pointers to executable code primitives instead of using a register pointing at a list of such primitives in Scheme main memory. Both of these stacks must be copied into Scheme main memory when it is necessary to save a continuation for any purpose, and every entry in each stack is itself a root for garbage collection.

When necessary to talk about these different stacks explicitly, I will refer to the combined S/D stack as the "Scheme machine stack", and to the stack that implements the continuation as the "continuation stack".

My SECD machine implementation actually has a number of other registers as well. I shall discuss many of them presently.

Also important was how the implementation identifies the kinds of objects it deals with. This issue spans several architectural layers: At a low operational level, it is undesirable to attempt to dereference anything that is not a valid pointer. Slightly higher up, the Lisp system may need to identify what kinds of objects it encounters in order to perform operations on them correctly and to display them properly, and perhaps to advise the user of errors in their use.

I decided early on that my Scheme implementation ought to have a low-level typing system that was as bullet-proof as I could make it, if only to save me from some of my own programming errors. Using some kind of tag to identify each object was the most powerful and extensible approach I knew of. I expected I was going to need a few bits for the garbage collector and for cdr-coding, and I did not want to have to keep masking off a few bits of pointers and such before I used them, so I implemented all the objects in my system as C structs containing two items.

The first item was the tag, which at the logical level is a set of bits with various meanings, among which is identifying what the second item of the struct is supposed to be. That second item was either a fundamental data element like a 32-bit integer, a 32-bit float, a character, or some other such thing, or else a pointer to something too large to fit in the struct itself. (The current implementation uses 64-bit quantities instead of 32-bit ones.) The fundamental objects are what would be called "rvals" in other programming languages; that is 'r' for "right", meaning things that go on the right side of an assignment operator. Many of my pointers corresponded to "lval"s, on the left side of an assignment operator, which represent addresses at which an rval may be stored, but some were a combination, such as a pointer to a cons cell, which is both a fundamental type and an indication of a place in memory where things may be put.

Since my struct encompassed pointers, data items, and things in between, depending on the tag bits, I coined for it the suggestive name "tagged aval", with the "a" standing for ambiguous, or perhaps for ambidextrous -- something that could be either an rval or an lval. The first line of actual code I wrote for my Scheme implementation was in fact the relevant C typedef:

typedef struct {

Tag t;

void *p;

} taggedAval;

which only becomes completely defined when I add:

typedef unsigned int UnsignedWord; typedef UnsignedWord Tag;

When contemplating those typedefs, it may help to remember:

Thus, I originally had Tag typedef'd only a byte long, but when I realized what the compiler was doing I declared it a full word, in the hope that some day I would find use for the extra bits. (I eventually did, but now I have changed the implementation to use 64-bit words, so I still have vastly more tag bits than I know what to do with.)

Most Wraith Scheme tagged avals are composed of two 64-bit words -- one for the tag and one for the aval, but there is a special kind of tagged aval called a "byte block", whose length may differ. A byte block is composed of a tag of type "byteBlockTag" immediately followed by one or more 64-bit words. The tag and all the words are adjacent in Scheme main memory. The first word past the tag -- the one that would be the aval in a more typical tagged aval -- contains an integer that is the number of 64-bit words, in addition to the tag, that the byte block contains. Thus for example, a byte block composed of five 64-bit words side by side will have a tag as word 0, will have the integer "4" in word 1, and will have words 2, 3, and 4 available to store data.

Tagged avals of type "stringTag" are used to store text strings, and have the same structure as byte blocks. A tagged aval of this type is in essence a byte block by another name.

I certainly wanted a more capable computer than my MPX-16 for my Scheme implementation. Its Intel 8088 processor could address more than the traditional 64 KBytes of memory, but Intel's early implementation of segment registers made it awkward to do so. Fortunately, a serious breakthrough machine had just appeared on the personal computer market, with a flat addressing system for a much larger memory space, and with an expandable hardware system that might actually allow MBytes, or even tens of MBytes, of RAM, for anyone rich enough to afford all those capacious memory chips.

The new computer was Apple's Macintosh II, with an early Motorola 68020 processor and an optional 68881 floating-point coprocessor, and with built-in hard disk options to 80 MByte. Mine came with an entire megabyte of memory, and I soon added four more megabytes. This gargantuan machine could surely handle a Scheme implementation. Stop laughing! Furthermore, Apple showed signs of supporting would-be Macintosh programmers with a serious development environment, Macintosh Programmer's Workshop. It wasn't Unix or TOPS-20, but after all, what could you expect from a PC? I had placed an order for one of these hot new machines as soon as they were announced. In consequence, I never did unpack my MPX-16 after I got settled in Santa Cruz.

The down side of using the Macintosh, of course, was having to cope with the frustrations of Apple's point-and-click graphical user interface (GUI). My computer use has always been heavily text-oriented, and I am a fairly fast typist. It always seemed like a waste of time to move my hands away from the keyboard to mess with a mouse, and when learning a new program it always took me forever to memorize what all the icons and symbols meant. (Even after more than thirty-five years using Mac-like GUIs, I still feel that way.) Furthermore, the programming interface to the Mac GUI was complicated and clumsy to the point of being Byzantine. But if an awkward user interface was the price I had to pay for a powerful machine, so be it; I do not regret being a Macintosh user, interface flaws notwithstanding, and I do acknowledge that my opinions on the subject are a minority viewpoint.

In any case, my decision to buy a Macintosh had a strong influence on the high-level architecture of my Scheme implementation. My dislike of mice and menus and my wariness about the programming interface made me shy away from writing a Mac-style application for as long as I could. That was a long time, because Macintosh Programmer's Workshop offered a way to write programs that used a text-based user interface and ran in something much like a traditional Unix shell. On the other hand, I knew I was probably going to have to bite the bullet eventually, and wrap something Mac-like around my Scheme machine.

Therefore, even while working up a user interface that in essence used stdin and stdout, I had to plan for something quite different in the future. So I wrote my early code with the calls to read and write text carefully encapsulated, to make it easy to substitute something fancier later on. I am not sure that anyone had clearly articulated the model/view/controller style of program design in 1987, but sloth, fear and loathing conspired to coerce me into writing my Scheme implementation in that manner, even without clearly understanding what I was doing.

That program structure paid enormous dividends down the road, when it came time to strip out the calls to the primitive Macintosh Toolbox of 1987 and replace them with an interaction with the much more sophisticated, much more powerful, and much more Byzantine Macintosh GUI of the early twenty-first century. It is quite possible that if I had been stereotypically enamored of the Macintosh Toolbox in 1987, I would have ended up writing a Scheme application so inextricably intertwined with it that it would have been all but impossible to port the code to the very different interface architecture of today.

(I hope no one takes me to task for calling Apple's software Byzantine. After all, it is worth something to have the might and majesty of the entire Byzantine Empire on your side.)

So I guess my advice to programmers who worry about maintaining proficiency and keeping up with the latest technology is, drag your feet and whine a lot. You will probably do just fine.

Henderson's SECD implementation of his virtual machine for functional programming got me used to thinking in terms of primitive operations for that construct; that is, used to thinking in the assembly language of the virtual machine itself. So when implementing that machine in a real programming language, on real hardware, to use as the architectural underpinnings of my Scheme machine, the question came up of how to distinguish the virtual machine level clearly in the code I would be writing.

Looked at in one way, such a distinction is an oxymoron. After all, the code I was writing was all C (later switching to C++), so what difference could it make? My answer was, that to get best use of the virtual machine required me to be able to see clearly when, where and how I was using the abstraction it represented in the code I created. There were no doubt going to be constructs from both higher and lower levels in the program architecture mixed in -- it was indeed all C -- but best practice required me at least to try to keep their use separate and in some sense orthogonal to the virtual machine instructions themselves. To do that well required me to be able to see those latter instructions clearly, at a glance.

My usual C/C++ coding style features identifiers composed mostly of lower-case letters, with either capitalization or underscores used to indicate word breaks, depending on what I had for breakfast that morning. To give the virtual machine assembly language a very different appearance and syntax, I decided to use tokens that were all upper-case, and to implement them as preprocessor macros -- the latter largely to get away as much as possible from superfluous parentheses and semicolons.

For example, the macro to push the contents of the accumulator of my Scheme machine onto the stack -- that is, onto the 'S' of the SECD machine -- is, rather unsurprisingly ...

PUSH

... which the preprocessor expands into ...

( *(--S) = R );

... in which R is the accumulator (which holds a taggedAval), and S is a pointer into a big array of taggedAval that represents the stack. (Yes, there are checks for stack overflow and underflow here and there.)

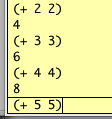

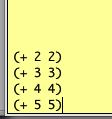

What I ended up doing with stuff like that was implementing Scheme operations in terms of the virtual machine assembly language. For example, here is how my Scheme implements the Scheme procedure "+". Don't worry for now about how the different macros expand (and I remind you again, that it is all ultimately C or C++), just look at it as a sample of assembly-language programming in a simple machine that uses a run-time stack for arithmetic operations.

/**** addCode -- Add the numbers stacked and return the sum,

except that if there are no numbers, return zero. ****/

PROC( addCode )

ZERO_FIXNUM_P

JUMP_FALSE( oneOrMore )

CONTINUE

oneOrMore:

ONE_FIXNUM_P

JUMP_TRUE( noMore )

EXCHANGE( R, STACK( 1 ) )

ADD

SWAP

DECREMENT_R

JUMP_LABEL( oneOrMore )

noMore:

POP

CONTINUE

END_PROC

The procedure starts out with an arbitrary number of addends pushed on the stack, with the actual number of addends in the accumulator. (I will defer for the moment, discussion of how the system type-checks its arguments, and how the stack and accumulator get set up that way.) It returns the result of all the adds in the accumulator.

Thus if you had entered the Scheme expression "(+ 1 -2 3 456.78e9)", the accumulator would start out containing a tagged aval that represented the exact integer 4 (how many addends there are), and from the top down, the stack would contain tagged avals for 456.78e9, 3, -2, and 1, and then anything else that might have been on it on it before the addends got pushed. I suspect you can see how "addCode" works, at the virtual machine level, without having to know how it expands to C in detail. That is what I intended by setting up the macros for virtual machine assembly language.

To see how good this abstraction is, and how useful, consider: In the thirty-odd years since I wrote "addCode", my Scheme implementation has changed from a pre-ANSI-C program written for a 32-bit architecture, with three numeric data types (32-bit fixnum, 32 and 80-bit flonums), to a C++ program written for a 64-bit architecture, with four entirely different numeric data types (64-bit fixnum, 64-bit flonum, complex (containing two 64-bit numbers), and rational (ditto)). Yet the only change in "addCode" came when I introduced the macro "SWAP" as an abbreviation for "EXCHANGE( R, STACK( 0 ) )". The point is, that even with all those lower-level changes in the last two decades, the virtual machine itself did not change -- or at least, the part that deals with simple stack-based arithmetic operations did not change -- and the representation of Scheme machine operations in terms of simpler primitives of the virtual machine remained constant.

The present source code for Wraith Scheme contains some 10000 lines of virtual-machine assembly-language routines like "addCode", and many of them have been unchanged since the earliest days of Pixie Scheme, in the late 1980s. The relative constancy of this body of code over time has been a great contribution to the maintainability, extensibility, and portability of the implementation.

I spent a fair part of the 1980s and 1990s writing microcode professionally. That experience also contributed to my ideas about the architecture of my Scheme implementation.

Technical Note: "Microcode" is a layer in the software architecture that runs between "machine language" and hardware. A '1' in the binary expression of a microcode instruction typically means that five volts -- or whatever -- gets applied to a particular circuit of the processor during that clock cycle. A '0' grounds the same circuit.

Every machine-language instruction that a microcoded processor encounters causes a corresponding small program of microcode to run, which actually does what the machine language instruction indicates. In that sense, the microcode is the actual "machine language" of the underlying hardware: The microcode is used to implement a virtual machine, which is the machine that an assembly-language programmer or a compiler-writer sees; it is the machine that is described in the assembly-language manual, and the like.

My colleagues used to say of me, "Jay Freeman -- where hardware meets software -- not a pretty sight!" ... and that was on days when they were polite.

Processors do not have to be microcoded, of course, but during that era, many were. I worked on microcode for for an array of custom bit-serial processors that Dick Lyon developed for speech analysis at SPAR, and later for MasPar's highly parallel single-instruction multiple-data (SIMD) machines, the MP-1 and MP-2.

The principle benefits of my microcode experience were that I was no stranger to the idea that there might be yet another layer of software between assembly language and the machine, and that I expected that some of that software might be very complicated. I suspect that many programmers will look at assembly language and think that what has to happen to make any given instruction work is simple -- take the "PUSH" example in my Scheme machine's assembly language, described above. That isn't so. For example, the MasPar machines that I worked on had assembly-language routines for many floating-point operations. I recall that the one for 64-bit square-root took almost 2000 microinstructions to implement. (It was an unrolled loop, with many instructions per bit of the result. The main hardware resources we had to work with were a shift register and a simple, four-bit-wide ALU.)

Or consider "ADD", in the implementation of Scheme's "+" procedure in the previous section. That "assembly language instruction" (repeat one more time, with feeling, "It's all just C!") might have to deal with operands of different sizes or types -- it could be asked to add a fixnum to a float, for example -- and it might not know till it was done what kind of numeric type it had to use to hold the result. Furthermore, it has to deal with Scheme's "exact bit", and with the possibility of nans, infinities, and underflow. That is not all simple.

My Scheme implementation's microcode, of course, is not implemented on hardware. It is probably most sensible to think of it as being implemented "on" C++.

The point was, that my predisposition to consider that microcode might be (a) there and (b) complicated, made it easy to dodge the occasional temptation to introduce complexity at the level of the virtual SECD Machine. I had no objection to using a complicated microcode routine to implement what appeared to be a simple and straightforward assembly-language instruction; I was used to that.

Furthermore, the use of a microcode layer in the architecture factors complexity into separate domains, and thereby makes it more manageable: Architectural layering serves the "KISS" principle (Keep It Simple, Stupid), by separating one big complicated mess into many smaller and simpler ones, with well-defined connections between them. Thus, the virtual-SECD-machine implementation of "+" can use "ADD" when it needs to, without worrying about how it works, and "ADD" can do its job without worrying about how many addends there are left to go and how they got set up on the stack. Thus when all is said and done, the user can write Scheme programs, and use "+" with fair confidence that it will behave as the Rn report specifies.

One further trick about microcode had caught my fancy, and influenced my Scheme implementation. I had read about one of the early Xerox workstations -- I think it was the Dorado, but I do not remember for sure -- that had microcode that could trap to high-level software. That is, when the microcode hit something it could not handle, it could run a routine written in assembler or a compiled language to help out. In the same way that I say, "It's all just C", microcoders who write that style of hardware microcode might say "It's all just electrons".

I used that approach in the code I was writing, regularly. The "microcode" routines often include SECD Machine assembly-language instructions, and some of the "assembler" routines include specific calls to large C/C++ routines that are not wrapped up in all-caps macros.

I frequently use the term "Scheme machine" to refer to the thread of a Wraith Scheme process that is running Wraith Scheme's read/eval/print loop, and to the code that is running there. The Scheme machine is the heart of Wraith Scheme: it is the interpreter that takes text strings with lots of parentheses, and processes Scheme procedures and special forms based on what they contain. You can think of the user interface, whether it is something as simple as stdin/stdout or as fancy as the canonical Macintosh GUI, as merely a collection of support infrastructure for the Scheme machine, to feed it coal and get rid of ashes and exhaust fumes.

I have tried to keep the Scheme machine as independent of the user interface as possible. The idea is to have the interface between those two software components of a Wraith Scheme implementation clearly visible, with the details of each well hidden from the other, both at the programming interface and at program-building time. Doing so is good design practice in its own right, and also facilitates possible future ports of Wraith Scheme to other interface architectures.

The notion of abstracting the Scheme machine away from the rest of the program that uses it has been extremely powerful. As I write these words, this Scheme machine has run on seven different processor hardware architectures, with six different user interfaces. What is more, the same source code has compiled in two different programming languages.

(The processor architectures are Motorola 68XXX with 16-bit word and 24-bit address space, Motorola 68XXX with 32-bit word and 24-bit address space, PowerPC III, Intel x86 32-bit, Intel x86 64-bit, the the ARM-class processor used in the early iPad, and Apple's recent ARM-based proprietary processors ("Apple silicon"). The user interfaces were those of the three different Pixie Schemes, that of Wraith Scheme using the Macintosh GUI, Wraith Scheme with a stdin/stdout user interface, and Wraith Scheme with an ncurses interface. The bit about two different programming languages is stretching a point, but only a little: The original source code was written and compiled in pre-ANSI C, but when I ran it through the GNU C++ compiler it basically compiled straight away, with no fatal errors.)

In my opinion, a major reason why the Wraith Scheme / Pixie Scheme project did not die at birth was that I stumbled on a software development plan that did not require that the whole program get written and start to work before any of it could be tested. An all-at-once approach would likely have resulted in me having to deal with thousands of bugs all at once, long after the time when the faulty code that produced them was fresh in my mind. Projects have failed for such reasons, and smart programmers have turned in their resumes when asked to work on code that was developed that way. I came up with a rather ad-hoc alternative development plan for Pixie Scheme that avoided those pitfalls. In broad terms, the plan was as follows:

LOAD( 2, R ) ;; Put integer 2 in the accumulator, "R". LOAD( 2, B ) ;; Put integer B in the "B" register (which no longer exists). ADD ;; Add B to R, and leave the result in the accumulator. DISPLAY ;; Print out the contents of the accumulator.

That code results in a readable and automatically checkable printout of "4". The point is, it permits testing of Scheme primitive operations without a Lisp-style storage allocator, without a garbage collector, and without any of the mechanisms of a read-eval-print loop.

By this time, I had a large automated regression test suite of tests of Scheme primitives, that all ran in a text shell under control of a simple shell script. I could diff the results against "gold" files to verify that things were still working, and could easily add more tests as I developed new primitives.

I am sure that there were many other possible ways to bring up Pixie Scheme one piece at a time. The point is, that I used one of them! The code did not all have to start working at once, and it was much easier to get it going in small increments.

When it came time to reimplement Pixie Scheme as Wraith Scheme, I used the same general kind of approach. I tried to work out incremental development plans for each major change, and I made sure to keep a substantial suite of automated tests handy, and to run it frequently.

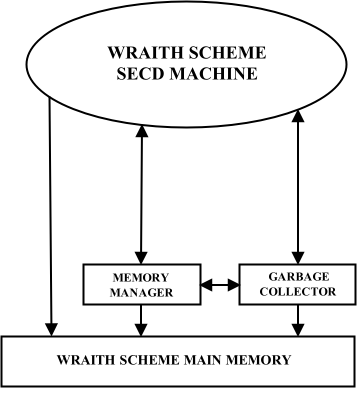

The architecture of Wraith Scheme is complicated. A complete diagram of it would probably have so many pieces that no one would dare to look at it. In this section, I provide a number of different diagrams, with explanations, that emphasize different parts of the program. All of these diagrams and explanations are simpler than reality, but I hope they will help.

A key issue in any Lisp implementation is how it interacts with memory. I do not mean hardware memory, or even the address space of the language in which the implementation is written -- those are at a lower architectural level. This memory -- Scheme main memory -- is the address space of the SECD virtual machine that underlies Wraith Scheme. It is a large area that contains only Lisp objects -- tagged avals, in the case of Wraith Scheme. Other places in that machine may contain tagged avals: The registers of the machine do, as do the stacks that implement 'S' and 'C', but any pointer in any tagged aval must point into Wraith Scheme main memory.

The issue of interacting with memory becomes even more complicated when several separate Wraith Scheme processes interact with memory that is shared between them. So for a start, let's assume that only one Scheme process is running, and ignore the stuff for coordinating between separate Scheme processes.

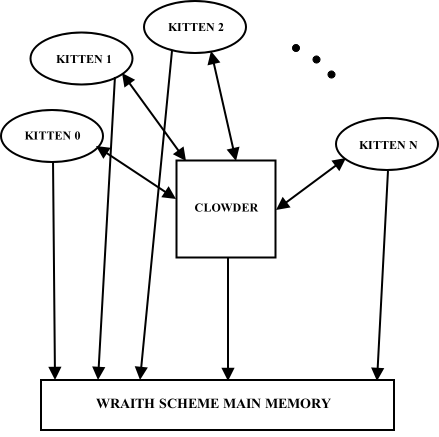

One Wraith Scheme process interacting with Scheme main memory,

using the memory manager and the garbage collector.

In most circumstances, any portion of the Wraith Scheme code may have access to any part of Wraith Scheme main memory, to read or write it, or for any other purpose. That access is represented in the figure by the direct arrow from the oval on top to the wide box at the bottom. The arrow is single-ended because the "memory" is a dumb object -- implemented as a C++ struct comprising a huge block of "flat" memory and a small amount of auxiliary information; it has no procedures of its own, and no way to invoke code in any of the other architectural blocks shown.

There are two exceptions to direct access. The first has to do with allocation of memory. Wraith Scheme main memory is empty when it is first created, at the time when the Wraith Scheme process is initialized. An object called the memory manager -- implemented as a singleton instance of a C++ class -- contains routines to allocate space of any desired size in Wraith Scheme main memory, and return a pointer to it to the caller. The rest of Wraith Scheme allocates memory by using the memory manager as an intermediary. The memory manager also includes procedures to initialize memory, to provide information about it, and to test its contents for consistency and correct form.

The garbage collector -- another singleton instance of a C++ class -- also has substantial responsibilities for dealing with Scheme main memory. Basically, when the memory manager runs out of allocatable memory -- that is, when an allocation request is about to fail for want of memory -- it asks the garbage collector to collect garbage, and attempts the allocation again afterward. (If there still is not enough memory, Wraith Scheme will print an error message and return to the top-level loop.) The garbage collector uses some of the memory manager procedures for its work. Other code may also ask for a garbage collection, even when there is plenty of unallocated memory left.

I should mention that putting the label "Wraith Scheme main memory" on a single block in an architectural diagram is a substantial oversimplification. Wraith Scheme uses a form of garbage collection algorithm that requires two main memory spaces, only one of which is in use at any given time. Furthermore, it uses substantial additional blocks of tagged pointers, and of other memory, for other purposes in memory management and in garbage collection; we will get to those later.

The interaction of Wraith Scheme with main memory becomes much more complicated when more than one Wraith Scheme process (kitten) is involved. There are critical sections to be locked, synchronizations to be achieved, and deadlocks to be avoided. For such purposes, I created an entity called the clowder to mediate and coordinate interprocess communication and interprocess competition for resources. ("Clowder" is a slightly archaic term for a group of cats.)

Several Wraith Scheme processes interacting with Scheme main memory,

emphasizing the role of the clowder.

Thus for example, the clowder mediates between simultaneous requests by different kittens to allocate memory by allowing only one to occur at a time, locks access to individual objects in Scheme main memory during critical sections, and schedules and synchronizes the collection of garbage from the separate, private registers and stacks of each kitten.

The clowder does not actually block any Wraith Scheme process's access to Scheme main memory; rather, it deals with simultaneous requests for memory access, or near-simultaneous requests, by granting only one of them. It is up to the kittens -- actually, to the C++ code which implements the Wraith Scheme processes -- to respect those grants and denials of requests for access.

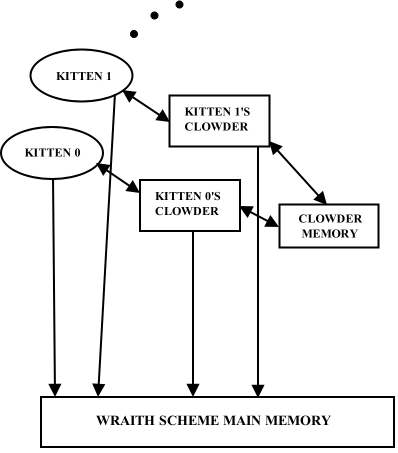

Although the clowder is logically a single separate entity which communicates with Wraith Scheme processes and with Wraith Scheme main memory, its implementation is a bit different.

Several Wraith Scheme processes interacting with Scheme main memory,

showing clowder memory and separate clowders.

Each separate Wraith Scheme process -- each kitten -- has as part of the process its own, separate instance of a C++ class called Clowder. Each such instance communicates with clowder memory, with Wraith Scheme main memory, and with its kitten.

Wraith Scheme main memory and clowder memory are created and initialized by kitten 0 -- the MomCat -- which is always the first of a group of parallel Wraith Scheme processes to be launched. These memory regions are group resources -- mmapped -- so that all kittens may access the same content. The other kittens need only be apprised of the location in memory of these regions, in order to use them.

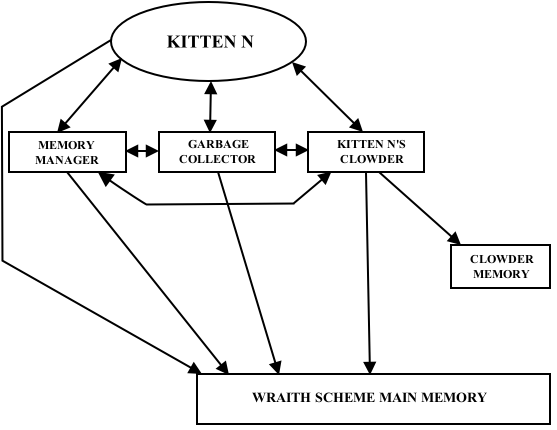

There is an even more detailed view of this part of the architecture that may be useful. Let us now look more closely at just one kitten out of many.

Closeup of one of many Wraith Scheme processes interacting with Scheme main memory,

showing its clowder, memory manager, and garbage collector.

The point of this rather messy picture is to show that the given Wraith Scheme process -- kitten N -- must use its memory manager and garbage collector to interact with Scheme main memory, as before, but with an additional complication: Since that memory is now shared with other, parallel, Wraith Scheme processes, all components of the process shown must use that process's clowder to arrange for locking objects for use in critical sections and for synchronization. Only the clowder's procedures talk directly to the shared clowder memory.

By way of example, if a given Wraith Scheme process wishes to read or write some object in Wraith Scheme main memory, it must first use one of the Clowder procedures to obtain a lock on that memory object. Once the lock is obtained, the Wraith Scheme process does what it needs to with the memory object in question, and then calls another clowder procedure to release the lock. This mechanism is a protocol -- it requires the developer (me) to make sure that the C++ code that implements the Scheme machine makes the proper calls to the Clowder procedures that establish and release locks.

How "typed" things are, and how typing works, varies considerably at different levels in the Wraith Scheme architecture:

Readers familiar with modern object-oriented programming languages will likely be puzzled by the Wraith Scheme source code. They will find lots of situations where I use many copies of certain data structures, with carefully controlled procedural access to their content. That sounds like a good reason to write code using some kind of object, yet I haven't used C++ classes in any of the likely places. What is more, the only places where I did use C++ classes were for a few rather large and clunky things that mostly show up as singletons, which are not usually high on one's list of stuff to implement with classes. What is going on?

Wraith Scheme is written in an object-oriented manner, but most of the code dates from a time when I did not have a suitable object-oriented language to write in. (The GNU folks were working on g++, but it was still pretty buggy, and Apple had not ported it to Macintosh Programmer's Workshop. Apple did have a good object-oriented version of Pascal, but I had a feeling that Pascal's days were numbered.) Thus I dealt with objects in an earlier style. Most of the key objects, that are used widely in Wraith Scheme, were originally implemented as instances of particular kinds of C structs, with access to their content by way of functions whose arguments frequently included a pointer to a specific instance of the struct in question. Some structs contained places for pointers to functions, so that I could write code like

thing->function( arg1, arg2, ... );

This is of course the kind of code that an early implementation of C++, based on macros and preprocessing, would have generated.

I left the original code in this form even after I got hold of a functioning C++ compiler, with no more change than substituting inline functions for macros, and changing a few struct slots to be unions in order to cope with the rather more strict casting rules of C++. There were several reasons why I kept the old code and coding style:

There are some real C++ classes in the Wraith Scheme source code, but as I write these words there are only four of them. There probably should be more. The history here is that I originally used the common practice of encapsulating big blocks of related code in separate files, each with a header that defined the public interface to its functionality. When it came time to upgrade my Scheme implementation from Pixie Scheme to Wraith Scheme, it was appropriate to refactor several of those files, and it was natural to turn them into separate classes at the time. I of course also expect to use classes for new large blocks of related code, but there has only been one of those so far, for the clowder.

There are other, smaller blocks of code in Wraith Scheme which might reasonably also be converted into classes, but I haven't gotten around to doing that yet.

All the "real C++" classes are used only as singletons. (Remember, that means one instance per Wraith Scheme process, but there may be many communicating Wraith Scheme processes active at the same time.) Each is discussed in detail elsewhere herein, but in summary, they are:

That is not to say that the view and controller are vanilla in entirety. They contain some serious weirdnesses, discussed elsewhere in this document. Yet their eccentricities are tidily packed up in a reasonable object-oriented piece of code, with only a few loose ends to hint at what is going on inside.

In brief, I have set up Wraith Scheme's build, test and software-archiving operations to be handled by the Unix "make" utility, with several Makefiles and many make targets. I use "git" for source code control. I invoke Apple's Xcode development system to build the version of Wraith Scheme that has a standard kind of Macintosh user interface, by means of "xcodebuild", launched as a Unix command from within make. (There are also make targets for a couple of other Wraith Scheme versions, that do not involve using Xcode.) For the record, in case you didn't know, "make", "git", "Xcode", and "scodebuild" are programs well known within the Unix or Macintosh developer communities.

There isn't any provision for configuring the Makefiles to run in more than one hardware/software environment; to the best of my knowledge, Wraith Scheme has only been built and run on Macintosh computers so far. There isn't much local configuration to be done when switching from one Mac to another, either. There are, unfortunately, some absolute paths here and there in the tests, but they need to be there. They are used in tests that verify that the Scheme primitives that deal with pathnames handle absolute paths correctly.

On my own computer, the name of the directory containing all of the material for the 64-bit Wraith Scheme project is "WraithScheme.64". The directories within it that developers will need to know about, that I will possibly mention elsewhere in this document, are listed below, with short comments about what is in each one. Anyone wishing to try building or developing Wraith Scheme on his or her own might reasonably be advised to duplicate this directory structure as a starting point.

WraithScheme.64 -- contains the whole project WraithScheme.64/ArmTestResults -- test "gold" files which are different in the arm64 architecture WraithScheme.64/"Auxiliary Directories" WraithScheme.64/"Auxiliary Directories"/CocoaShadowDir -- target for a partial snapshot used in backing up the project WraithScheme.64/CocoaSource -- source and header files for the Objective-C part of Wraith Scheme WraithScheme.64/ExtraTests -- one of several directories containing tests WraithScheme.64/include -- include files for the C++ part of Wraith Scheme WraithScheme.64/SchemeSource -- Scheme code used in Wraith Scheme WraithScheme.64/SchemeSource/Compiler -- Wraith Scheme's simple compiler WraithScheme.64/SchemeSource/TestFolder -- another directory of tests WraithScheme.64/source -- source files for the C++ part of Wraith Scheme WraithScheme.64/Tests -- the main directory for Wraith Scheme tests WraithScheme.64/Tests/TestFolder -- one more directory of tests WraithScheme.64/WraithCocoa -- what Xcode thinks of as the project directory for Wraith Scheme; contains many resources WraithScheme.64/WraithCocoa/ColoredLights -- images used for the blinking lights on the Wraith Scheme Instrument Panel, and for the sense lights WraithScheme.64/WraithCocoa/English.lproj WraithScheme.64/WraithCocoa/English.lproj/SampleSourceCode -- sample programs included in the distribution WraithScheme.64/WraithCocoa/English.lproj/Tutorials -- tutorials to help with learning Wraith Scheme WraithScheme.64/WraithCocoa/English.lproj/"Wraith Scheme Help" -- the main directory for Wraith Scheme's on-line documentation WraithScheme.64/WraithCocoa/English.lproj/"Wraith Scheme Help"/HelpImages -- images used in the Wraith Scheme Help document WraithScheme.64/WraithCocoa/English.lproj/"Wraith Scheme Help"/InternalsImages -- images used in the Wraith Scheme Internals document

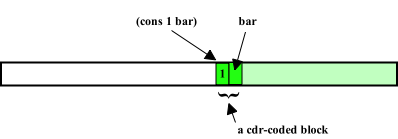

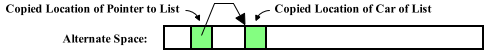

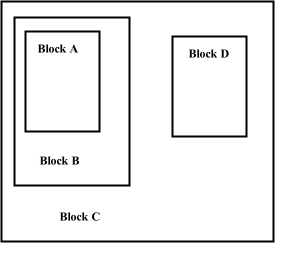

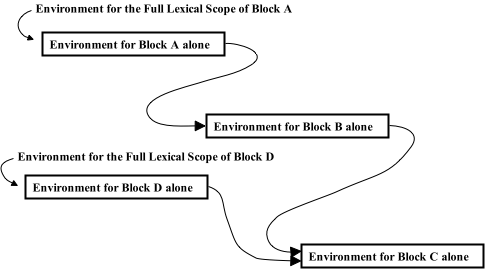

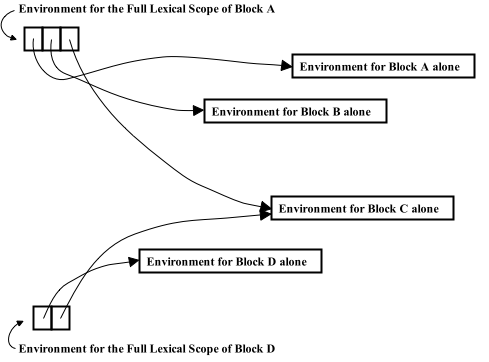

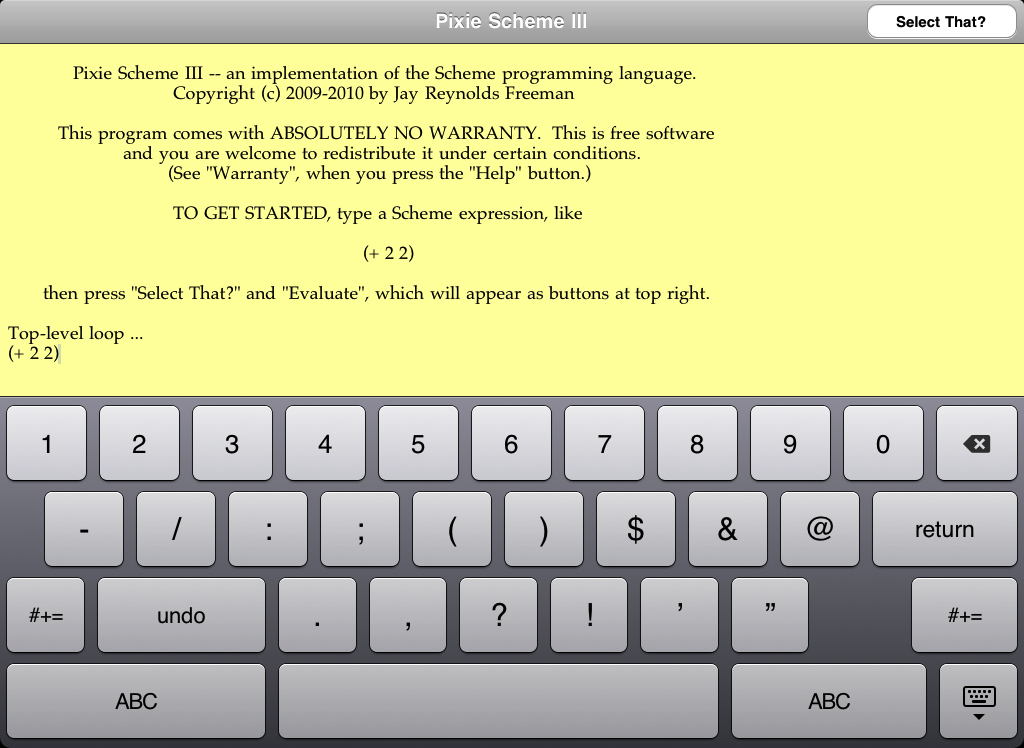

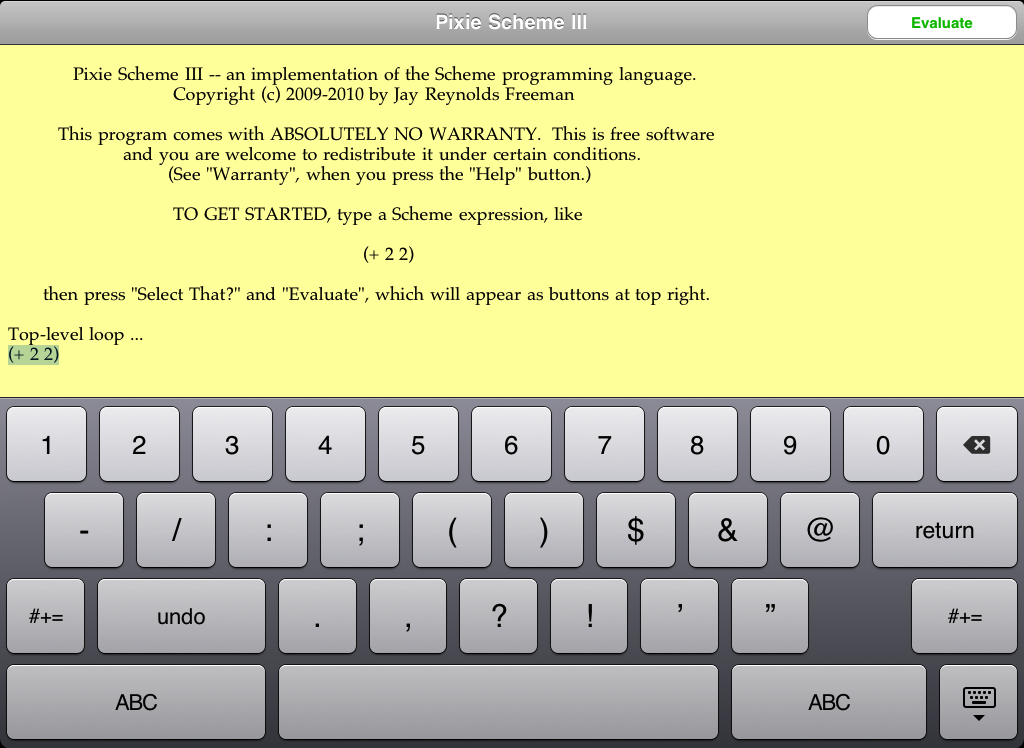

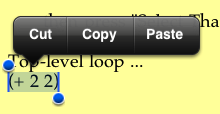

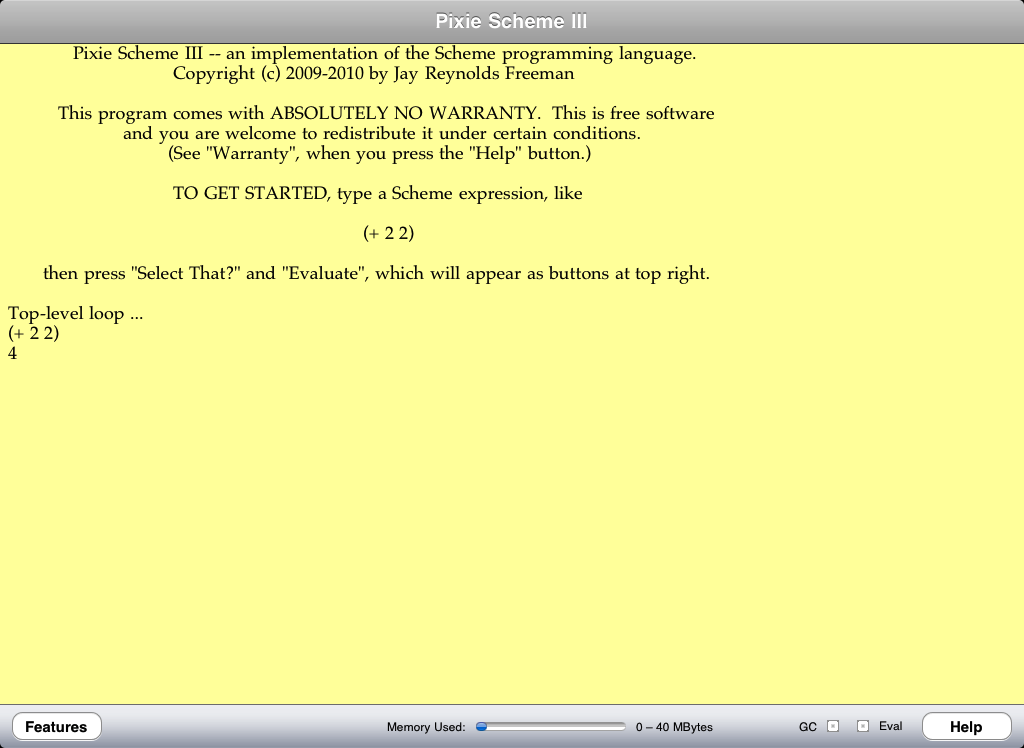

There is also a similar directory tree for the Pixie Scheme II project.